Writing unit tests can be difficult, time-consuming, and slow when you can't isolate the classes you want to test from the rest of the system. In this course, Mocking in .NET Core Unit Tests with Moq: Getting Started, you'll learn how to create mocks and use them as dependencies to the classes you want to test. First, you'll discover how to configure mocked methods and properties to return specific values. Next, you'll cover how to perform behavior/interaction testing. Finally, you'll explore how to set up mocked exceptions and events. When you're finished with this course, you'll have the necessary knowledge to use Moq to unit test your classes in isolation by creating and using mock objects.
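As a first taste of configuring a mocked method and property to return specific values, here is a minimal sketch. The `IGreetingSource` interface and `Greeter` class are hypothetical names invented for this illustration and are not part of the sample project built below; the sketch assumes the Moq and xUnit packages are installed.

```csharp
using Moq;
using Xunit;

// Hypothetical dependency, used only for this illustration.
public interface IGreetingSource
{
    string Prefix { get; }
    string NameFor(int id);
}

// Hypothetical class under test that depends on IGreetingSource.
public class Greeter
{
    private readonly IGreetingSource _source;

    public Greeter(IGreetingSource source) => _source = source;

    public string Greet(int id) => $"{_source.Prefix} {_source.NameFor(id)}";
}

public class GreeterTest
{
    [Fact]
    public void Greet_UsesValuesFromMock()
    {
        var mock = new Mock<IGreetingSource>();

        // Configure a property getter and a method call to return fixed values.
        mock.SetupGet(s => s.Prefix).Returns("Hello");
        mock.Setup(s => s.NameFor(1)).Returns("JK");

        // The class under test receives the mock instead of a real dependency.
        var greeter = new Greeter(mock.Object);

        Assert.Equal("Hello JK", greeter.Greet(1));
    }
}
```

Because `Greeter` only sees the interface, the test runs without any real implementation of `IGreetingSource`.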

Set Up the Project

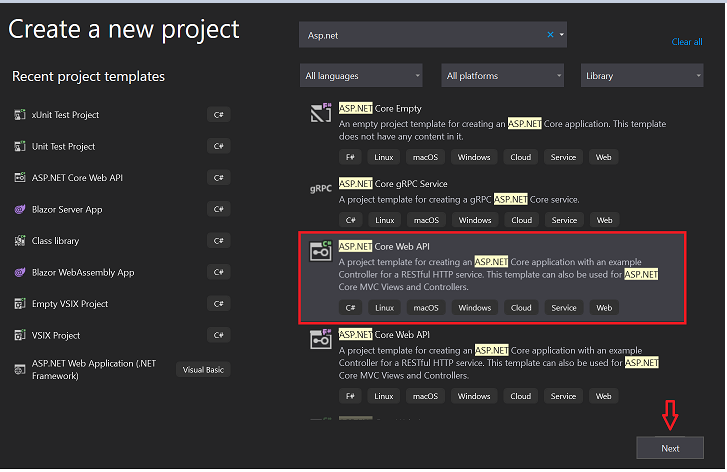

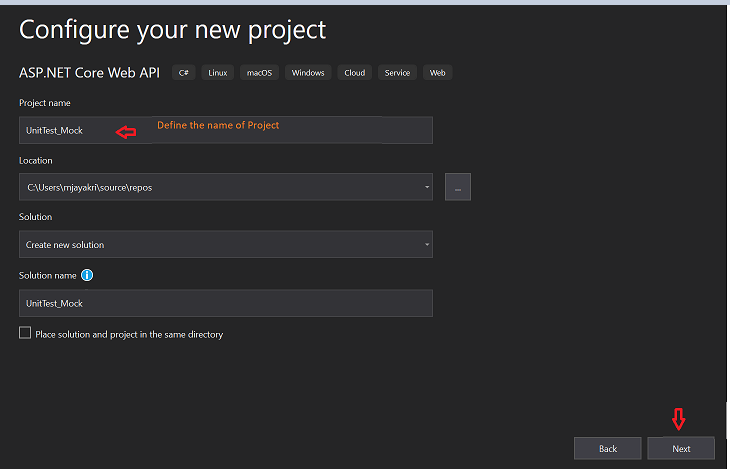

Let's create a sample Web API project with basic CRUD operations using the EF Core code-first approach.

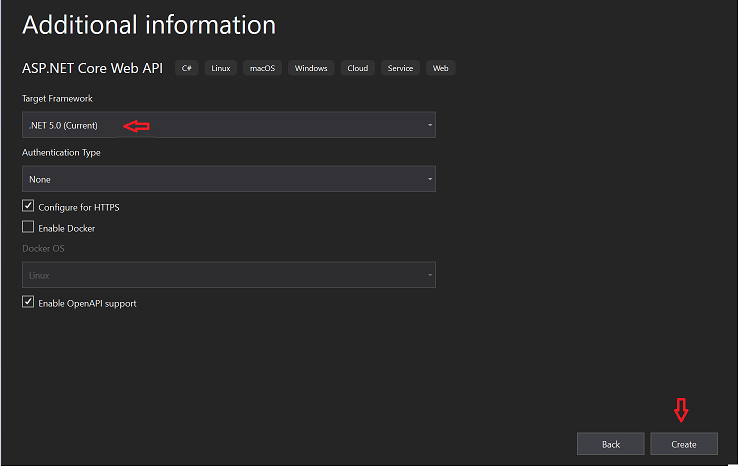

I have .NET 5.0 installed on my machine, so I am going with the latest template; you can choose whichever version you are comfortable with.

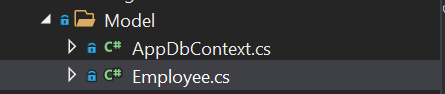

Create a Model folder and, inside it, add the model class and the DbContext for the Entity Framework Core code-first setup.

Employee.cs

    using System;
    using System.Collections.Generic;
    using System.ComponentModel.DataAnnotations;
    using System.Linq;
    using System.Threading.Tasks;

    namespace UnitTest_Mock.Model
    {
        public class Employee
        {
            [Key]
            public int Id { get; set; }
            public string Name { get; set; }
            public string Desgination { get; set; }
        }
    }

AppDbContext.cs

    using Microsoft.EntityFrameworkCore;
    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;

    namespace UnitTest_Mock.Model
    {
        public partial class AppDbContext : DbContext
        {
            public AppDbContext(DbContextOptions<AppDbContext> options) : base(options)
            {
            }

            public DbSet<Employee> Employees { get; set; }
        }
    }

Let's set up the connection string that EF Core uses for the code-first operations.

appsettings.json

    {
      "Logging": {
        "LogLevel": {
          "Default": "Information",
          "Microsoft": "Warning",
          "Microsoft.Hosting.Lifetime": "Information"
        }
      },
      "AllowedHosts": "*",
      "ConnectionStrings": {
        "myconn": "server=Your server name; database=UnitTest;Trusted_Connection=True;"
      }
    }

Startup.cs

    using Microsoft.AspNetCore.Builder;
    using Microsoft.AspNetCore.Hosting;
    using Microsoft.AspNetCore.HttpsPolicy;
    using Microsoft.AspNetCore.Mvc;
    using Microsoft.EntityFrameworkCore;
    using Microsoft.Extensions.Configuration;
    using Microsoft.Extensions.DependencyInjection;
    using Microsoft.Extensions.Hosting;
    using Microsoft.Extensions.Logging;
    using Microsoft.OpenApi.Models;
    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using UnitTest_Mock.Model;
    using UnitTest_Mock.Services;

    namespace UnitTest_Mock
    {
        public class Startup
        {
            public Startup(IConfiguration configuration)
            {
                Configuration = configuration;
            }

            public IConfiguration Configuration { get; }

            // This method gets called by the runtime. Use this method to add services to the container.
            public void ConfigureServices(IServiceCollection services)
            {
                services.AddControllers();
                services.AddSwaggerGen(c =>
                {
                    c.SwaggerDoc("v1", new OpenApiInfo { Title = "UnitTest_Mock", Version = "v1" });
                });

                #region Connection String
                services.AddDbContext<AppDbContext>(item => item.UseSqlServer(Configuration.GetConnectionString("myconn")));
                #endregion

                services.AddScoped<IEmployeeService, EmployeeService>();
            }

            // This method gets called by the runtime. Use this method to configure the HTTP request pipeline.
            public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
            {
                if (env.IsDevelopment())
                {
                    app.UseDeveloperExceptionPage();
                    app.UseSwagger();
                    app.UseSwaggerUI(c => c.SwaggerEndpoint("/swagger/v1/swagger.json", "UnitTest_Mock v1"));
                }

                app.UseHttpsRedirection();
                app.UseRouting();
                app.UseAuthorization();

                app.UseEndpoints(endpoints =>
                {
                    endpoints.MapControllers();
                });
            }
        }
    }

Create the tables by running the following commands in the Package Manager Console.

Step 1

Create a migration script:

PM> Add-Migration 'Initial'

Step 2

Apply the migration to the SQL Server database:

PM> Update-Database

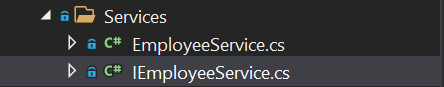

Create a Services folder to hold the business logic for all the operations.

EmployeeService.cs

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using UnitTest_Mock.Model;
    using Microsoft.EntityFrameworkCore;

    namespace UnitTest_Mock.Services
    {
        public class EmployeeService : IEmployeeService
        {
            #region Property
            private readonly AppDbContext _appDbContext;
            #endregion

            #region Constructor
            public EmployeeService(AppDbContext appDbContext)
            {
                _appDbContext = appDbContext;
            }
            #endregion

            public async Task<string> GetEmployeebyId(int EmpID)
            {
                var name = await _appDbContext.Employees.Where(c => c.Id == EmpID).Select(d => d.Name).FirstOrDefaultAsync();
                return name;
            }

            public async Task<Employee> GetEmployeeDetails(int EmpID)
            {
                var emp = await _appDbContext.Employees.FirstOrDefaultAsync(c => c.Id == EmpID);
                return emp;
            }
        }
    }

IEmployeeService.cs

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using UnitTest_Mock.Model;

    namespace UnitTest_Mock.Services
    {
        public interface IEmployeeService
        {
            Task<string> GetEmployeebyId(int EmpID);
            Task<Employee> GetEmployeeDetails(int EmpID);
        }
    }

Register these services in the Startup.cs file. The registration, services.AddScoped<IEmployeeService, EmployeeService>(), is already included in the Startup.cs listing above.

Create API methods for those services in the controller class.

EmployeeController.cs

    using Microsoft.AspNetCore.Mvc;
    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using UnitTest_Mock.Model;
    using UnitTest_Mock.Services;

    namespace UnitTest_Mock.Controllers
    {
        [Route("api/[controller]")]
        [ApiController]
        public class EmployeeController : ControllerBase
        {
            #region Property
            private readonly IEmployeeService _employeeService;
            #endregion

            #region Constructor
            public EmployeeController(IEmployeeService employeeService)
            {
                _employeeService = employeeService;
            }
            #endregion

            [HttpGet(nameof(GetEmployeeById))]
            public async Task<string> GetEmployeeById(int EmpID)
            {
                var result = await _employeeService.GetEmployeebyId(EmpID);
                return result;
            }

            [HttpGet(nameof(GetEmployeeDetails))]
            public async Task<Employee> GetEmployeeDetails(int EmpID)
            {
                var result = await _employeeService.GetEmployeeDetails(EmpID);
                return result;
            }
        }
    }

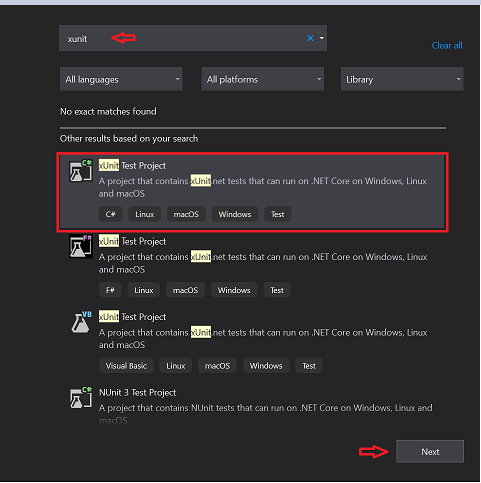

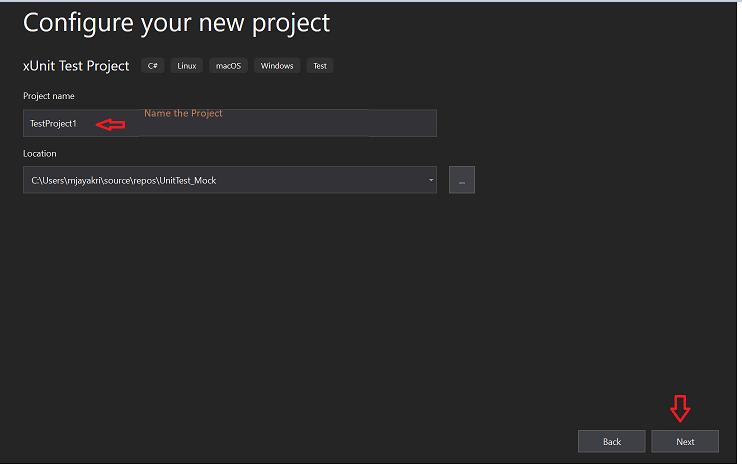

Let us add a test project to this solution, where we can write test cases for those functions.

Right-click on the solution.

Click on Add > New Project.

Search for the xUnit Test Project template.

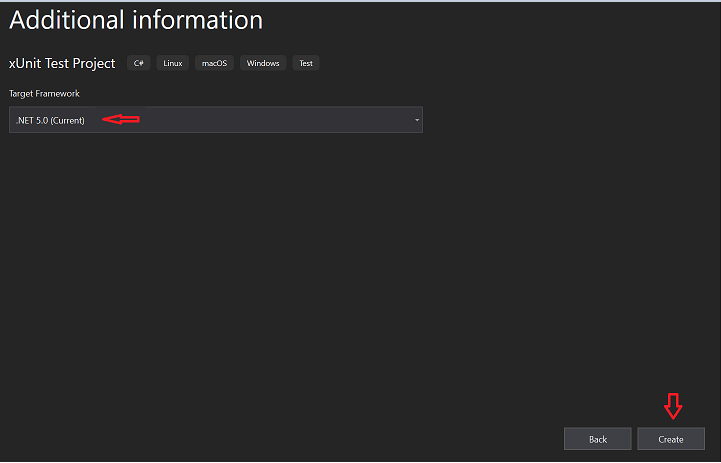

Choose the same target framework that we used in the API project.

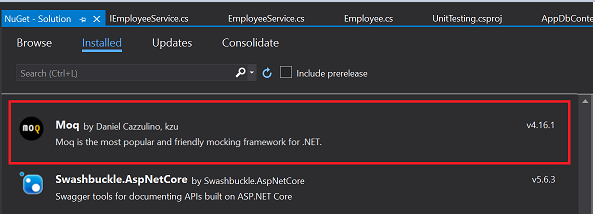

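Install the Moq NuGet package in this unit test project, and add a project reference to the API project so that the tests can use its controller, model, and service types.

Create a class inside this test project to define our test cases. If you also want to call the API manually (for example, through Swagger), open SQL Server and insert some dummy data into the employee table; the mocked tests below never touch the database, so they do not depend on that data.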

EmployeeTest.cs

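    using System.Threading.Tasks;
    using Moq;
    using UnitTest_Mock.Controllers;
    using UnitTest_Mock.Model;
    using UnitTest_Mock.Services;
    using Xunit;

    namespace UnitTesting
    {
        public class EmployeeTest
        {
            #region Property
            // xUnit creates a new EmployeeTest instance for each test, so every test gets a fresh mock.
            private readonly Mock<IEmployeeService> mock = new Mock<IEmployeeService>();
            #endregion

            // Test methods return Task rather than void so the runner can await them and observe failures.
            [Fact]
            public async Task GetEmployeebyId()
            {
                // Arrange: the mocked service returns "JK" for employee 1.
                mock.Setup(p => p.GetEmployeebyId(1)).ReturnsAsync("JK");
                EmployeeController emp = new EmployeeController(mock.Object);

                // Act
                string result = await emp.GetEmployeeById(1);

                // Assert
                Assert.Equal("JK", result);
            }

            [Fact]
            public async Task GetEmployeeDetails()
            {
                // Arrange: the mocked service returns this exact Employee instance.
                var employeeDTO = new Employee()
                {
                    Id = 1,
                    Name = "JK",
                    Desgination = "SDE"
                };
                mock.Setup(p => p.GetEmployeeDetails(1)).ReturnsAsync(employeeDTO);
                EmployeeController emp = new EmployeeController(mock.Object);

                // Act
                var result = await emp.GetEmployeeDetails(1);

                // Assert: Employee does not override Equals, so the original check was a
                // reference comparison; Assert.Same states that intent directly.
                Assert.Same(employeeDTO, result);
            }
        }
    }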

Here we set up the mock for the business service that the controller depends on, call the controller action, and compare the result with the values we defined.

We can also debug the test cases to inspect the output while they run.
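The same mock can also be used for behavior/interaction testing and for simulating failures. The sketch below is one possible way to do this, not part of the original test class. To keep it compilable on its own, it declares simplified stand-ins for `IEmployeeService` and `EmployeeController` (the stand-in controller does not inherit from `ControllerBase`); in the real test project you would reference the actual types instead. The test names, the employee ID 99, and the choice of `InvalidOperationException` are assumptions made for illustration.

```csharp
using System;
using System.Threading.Tasks;
using Moq;
using Xunit;

// Simplified stand-in for the article's IEmployeeService (assumption: one method is enough here).
public interface IEmployeeService
{
    Task<string> GetEmployeebyId(int EmpID);
}

// Simplified stand-in for EmployeeController, without the ASP.NET Core base class.
public class EmployeeController
{
    private readonly IEmployeeService _employeeService;

    public EmployeeController(IEmployeeService employeeService) => _employeeService = employeeService;

    public async Task<string> GetEmployeeById(int EmpID) => await _employeeService.GetEmployeebyId(EmpID);
}

public class EmployeeInteractionTest
{
    [Fact]
    public async Task GetEmployeeById_CallsServiceExactlyOnce()
    {
        var mock = new Mock<IEmployeeService>();
        mock.Setup(p => p.GetEmployeebyId(It.IsAny<int>())).ReturnsAsync("JK");
        var controller = new EmployeeController(mock.Object);

        await controller.GetEmployeeById(1);

        // Interaction check: the service was called once with ID 1 and never with ID 2.
        mock.Verify(p => p.GetEmployeebyId(1), Times.Once());
        mock.Verify(p => p.GetEmployeebyId(2), Times.Never());
    }

    [Fact]
    public async Task GetEmployeeById_PropagatesServiceException()
    {
        var mock = new Mock<IEmployeeService>();

        // Hypothetical failure: the mocked service returns a faulted task for ID 99.
        mock.Setup(p => p.GetEmployeebyId(99))
            .ThrowsAsync(new InvalidOperationException("Employee not found"));
        var controller = new EmployeeController(mock.Object);

        // The controller does not catch the exception, so it surfaces to the caller.
        await Assert.ThrowsAsync<InvalidOperationException>(() => controller.GetEmployeeById(99));
    }
}
```

`Verify` checks how the dependency was used rather than what the controller returned, which is useful when an action has side effects but no meaningful return value.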

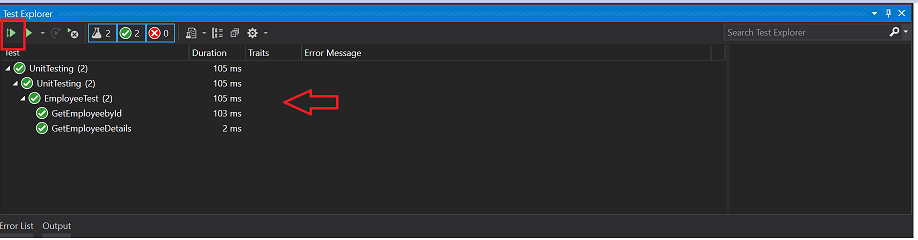

Run all the test cases to verify whether they pass or fail.

Click on View in the top-left menu.

Click on Test Explorer.

In the image above, we can see that all our test cases passed, along with their durations. I hope this article helps you understand unit testing with mock objects.