August 12, 2022 08:06 by Peter

This is a series of two articles that will help you learn about rate limiting and how it can be applied in a microservice or .NET Core API.

It will cover the following topics:

What is Rate limiting? (Covered in the last article)

Why do we use Rate limiting? (Covered in the last article)

Real-world use-case of Rate limiting (Covered in the last article)

Hands-on Lab – Create a Web API project and execute the API using Swagger (Covered in the last article)

Hands-on Lab – Implement Rate limiting using the AspNetCoreRateLimit NuGet Package (Covered in the last article)

Difference between Rate Limiting and Throttling

Hands-on Lab – Implement Rate limiting using a Custom Middleware

Difference between Rate Limiting and Throttling

Rate limiting refers to the broader concept of restricting the request traffic to an API endpoint at any point in time.

Throttling is one particular way of applying rate limiting to an API endpoint.

There are other ways an API endpoint can apply rate limiting. One such way is the use of request queues. This process queues the incoming requests and then serves them to the API endpoint at a rate that the API can process gracefully.

In throttling, by contrast, the API endpoint returns a status code (HTTP 429) to tell the consumer that it may not send any more requests within the current time window. The client application can then retry after the time window passes.
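To make the client side of throttling concrete, here is a minimal sketch of a caller that waits and retries when it receives a 429. The helper name `GetWithRetryAsync`, the attempt limit, and the 50-second fallback delay are assumptions made for illustration. The sketch honors a `Retry-After` header if the server sends one, which the API in this article is not known to do.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

public static class ThrottledClient
{
    // Hypothetical helper: retries a GET while the server answers 429 Too Many Requests.
    public static async Task<HttpResponseMessage> GetWithRetryAsync(
        HttpClient client, string url, int maxAttempts = 3)
    {
        for (var attempt = 1; ; attempt++)
        {
            var response = await client.GetAsync(url);

            // Return anything that is not a 429, or give up after the last attempt.
            if (response.StatusCode != HttpStatusCode.TooManyRequests || attempt >= maxAttempts)
            {
                return response;
            }

            // Use the server's Retry-After delay if present; otherwise fall back
            // to an assumed window length of 50 seconds.
            var delay = response.Headers.RetryAfter?.Delta ?? TimeSpan.FromSeconds(50);
            response.Dispose();
            await Task.Delay(delay);
        }
    }
}
```

With a request queue, by comparison, the caller would not see a 429 at all; the request would simply take longer to complete.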

Hands-on Lab – Implement Rate limiting using a Custom Middleware

Steps to be followed:

In the existing ASP.NET Core API application created in the last article, let’s use an attribute to decorate the endpoint that we want to rate limit.

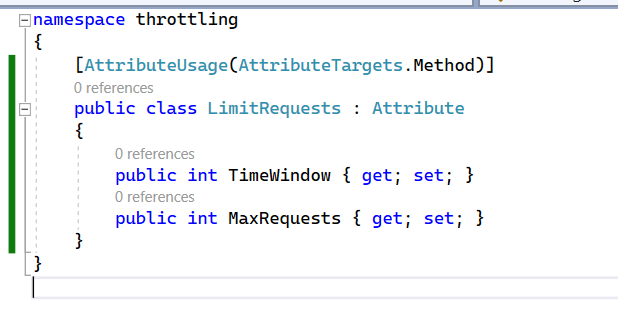

Add an attribute class named LimitRequests. This attribute applies only to methods. The two properties in the attribute indicate the max requests allowed within a specific time window. The attribute approach gives us the flexibility to apply different rate-limiting configurations for different endpoints within the same API.

Let’s apply the LimitRequests decorator to our endpoint and configure it to allow a maximum of two requests for a window of 50 seconds. A third request within 50 seconds would not be allowed.

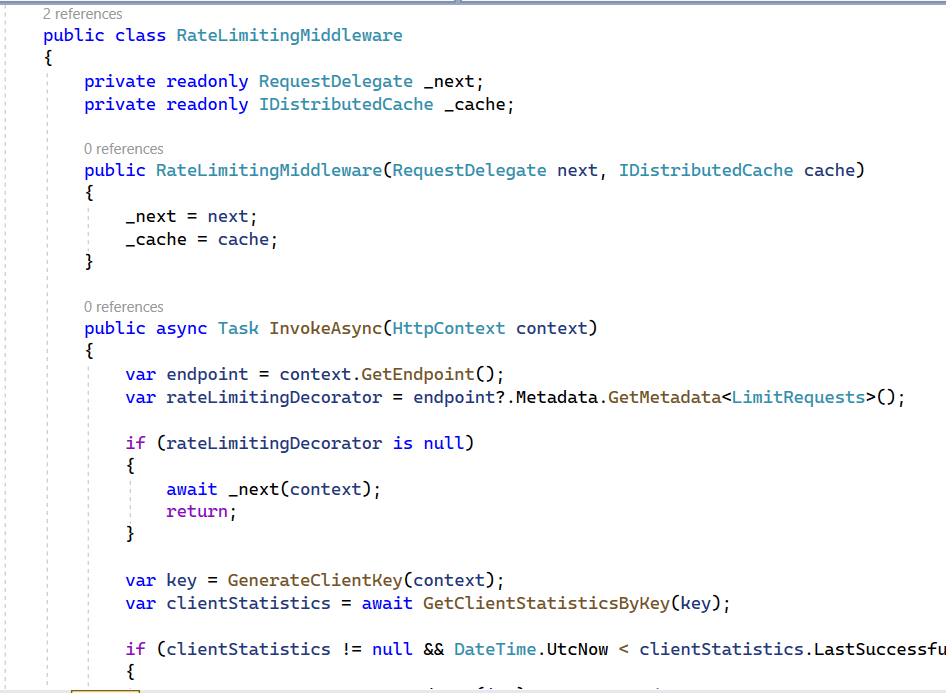
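For readers who cannot see the screenshots, the attribute and its use might look roughly like the sketch below. The property names `MaxRequests` and `TimeWindow`, the controller name, and the response body are assumptions; the article only states that the attribute has two properties and applies to methods.

```csharp
using System;
using Microsoft.AspNetCore.Mvc;

// Restricts the attribute to methods, as described in the article.
[AttributeUsage(AttributeTargets.Method)]
public sealed class LimitRequests : Attribute
{
    // Assumed name: maximum number of requests allowed within the window.
    public int MaxRequests { get; set; }

    // Assumed name: length of the time window, in seconds.
    public int TimeWindow { get; set; }
}

[ApiController]
[Route("[controller]")]
public class WeatherForecastController : ControllerBase // hypothetical controller
{
    // At most two requests per 50-second window; a third is rejected.
    [HttpGet]
    [LimitRequests(MaxRequests = 2, TimeWindow = 50)]
    public IActionResult Get() => Ok(new[] { "Sunny", "Cloudy" });
}
```

Because the limits live on the attribute, another endpoint in the same controller could carry different values or no limit at all.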

Now, implement a middleware that uses a distributed cache to store NumberOfRequestsCompletedSuccessfully and LastSuccessfulResponseTime. The middleware compares these cached values with the limits set in the attribute on the endpoint, and then either lets the request through to return a valid response or returns an HTTP response with status code 429.

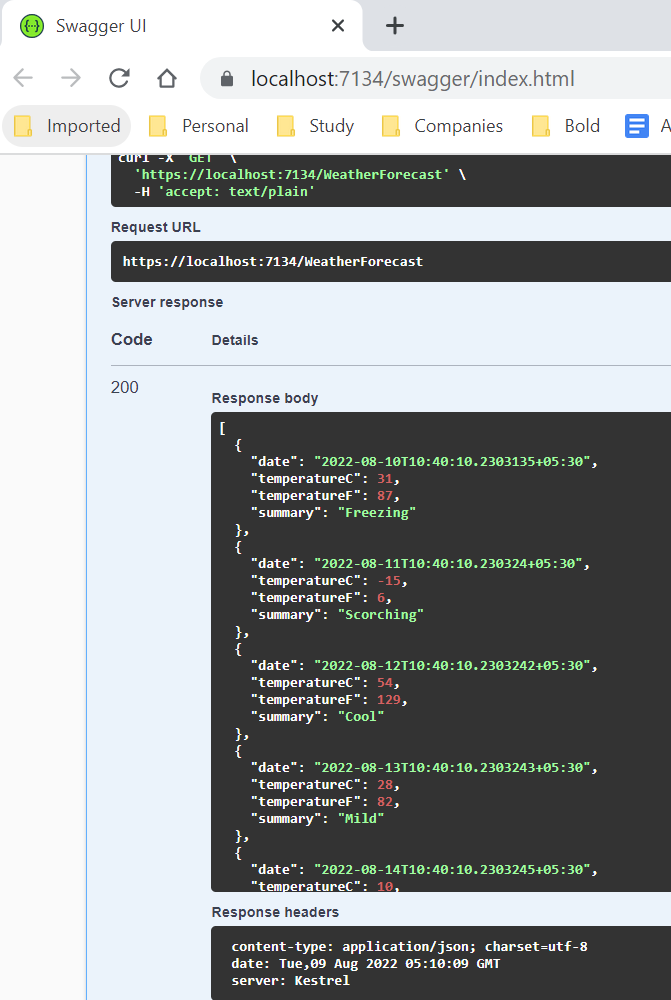
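The middleware described above could be sketched as follows. This is one possible implementation under stated assumptions, not necessarily the code in the screenshot: the class names `ClientStatistics` and `RateLimitingMiddleware`, the cache key format, and the attribute property names `MaxRequests` and `TimeWindow` are all hypothetical. Only the two stored values and the 429 status come from the article.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Caching.Distributed;

// Assumed shape of the LimitRequests attribute (property names are hypothetical).
[AttributeUsage(AttributeTargets.Method)]
public sealed class LimitRequests : Attribute
{
    public int MaxRequests { get; set; }
    public int TimeWindow { get; set; } // seconds
}

// The two values the article stores in the distributed cache.
public class ClientStatistics
{
    public DateTime LastSuccessfulResponseTime { get; set; }
    public int NumberOfRequestsCompletedSuccessfully { get; set; }
}

public class RateLimitingMiddleware
{
    private readonly RequestDelegate _next;
    private readonly IDistributedCache _cache;

    public RateLimitingMiddleware(RequestDelegate next, IDistributedCache cache)
    {
        _next = next;
        _cache = cache;
    }

    public async Task InvokeAsync(HttpContext context)
    {
        // Endpoints without the attribute are not rate limited.
        var limit = context.GetEndpoint()?.Metadata.GetMetadata<LimitRequests>();
        if (limit is null)
        {
            await _next(context);
            return;
        }

        // Assumed key: one counter per endpoint path and client IP address.
        var key = $"{context.Request.Path}_{context.Connection.RemoteIpAddress}";
        var json = await _cache.GetStringAsync(key);
        var stats = json is null ? null : JsonSerializer.Deserialize<ClientStatistics>(json);
        var now = DateTime.UtcNow;

        if (stats is not null &&
            now < stats.LastSuccessfulResponseTime.AddSeconds(limit.TimeWindow))
        {
            // Still inside the window: reject once the quota is used up.
            if (stats.NumberOfRequestsCompletedSuccessfully >= limit.MaxRequests)
            {
                context.Response.StatusCode = StatusCodes.Status429TooManyRequests;
                return;
            }
            stats.NumberOfRequestsCompletedSuccessfully++;
        }
        else
        {
            // First request, or the window has passed: start counting again.
            stats = new ClientStatistics { NumberOfRequestsCompletedSuccessfully = 1 };
        }

        // Recorded before the endpoint runs, so the request is assumed to succeed.
        stats.LastSuccessfulResponseTime = now;
        await _cache.SetStringAsync(key, JsonSerializer.Serialize(stats));
        await _next(context);
    }
}
```

To try the sketch, a cache implementation such as `AddDistributedMemoryCache()` would need to be registered in the service collection, and the middleware added after routing with `app.UseMiddleware<RateLimitingMiddleware>()`, so that the endpoint metadata is available. The read-then-write on the cache is not atomic, so concurrent requests from the same client could slip past the limit; a production version would need an atomic counter.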

Run the application (press F5) and execute the API from Swagger a first and a second time. See the screenshot below:

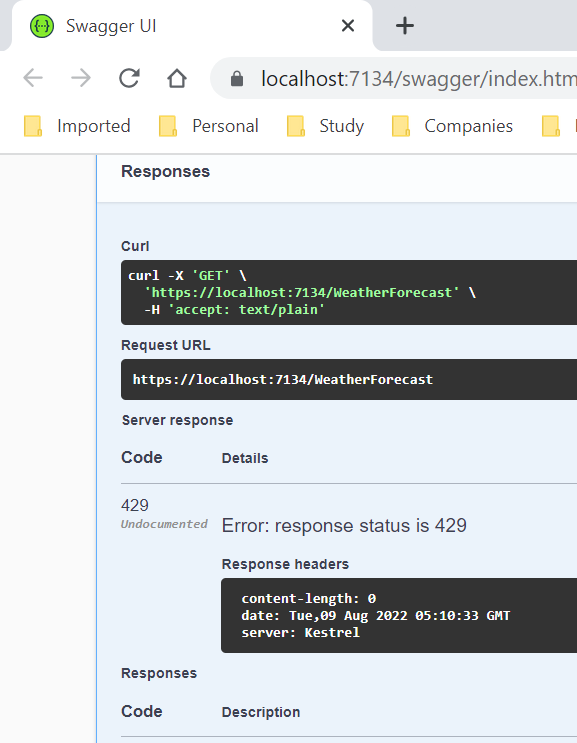

Execute the API from Swagger a third time: the quota of calls for that particular API has now been exceeded, so the request is rejected.

This is how we implement rate limiting using a custom middleware, and how throttling differs from rate limiting. In this series of two articles, we have covered both approaches to implementing rate limiting in a .NET Core API. I've attached the source for your reference. I hope to see you again in the next article.

Happy learning!
