July 30, 2021 05:35 by

Peter

A cache is temporary storage that holds the results of frequently accessed data so that subsequent requests load faster. Most applications use a database as their main storage. Every time you load user data, one or more database calls are executed to fetch it, which can hamper performance.
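To make the idea concrete, here is a minimal sketch of a cache placed in front of a database. `FakeDatabase`, `UserCache`, and the call counter are hypothetical names used only for illustration; a real application would talk to an actual database and cache service.

```python
class FakeDatabase:
    """Hypothetical stand-in for a slow database."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.calls = 0  # counts how many times the database was actually hit

    def load_user(self, user_id):
        self.calls += 1
        return self.rows.get(user_id)


class UserCache:
    """Checks an in-memory dict first and falls back to the database on a miss."""

    def __init__(self, database):
        self.database = database
        self.store = {}

    def get_user(self, user_id):
        if user_id in self.store:                # cache hit: no database call
            return self.store[user_id]
        user = self.database.load_user(user_id)  # cache miss: go to the database
        if user is not None:
            self.store[user_id] = user           # remember the result for next time
        return user


db = FakeDatabase({1: {"name": "Alice"}})
cache = UserCache(db)
cache.get_user(1)
cache.get_user(1)
print(db.calls)  # 1: the second lookup is served from the cache
```

The second request for the same user never reaches the database, which is where the speed-up comes from.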

Advantages

One of the key benefits of having a separate cache is the ability to scale the cache system independently.

Using a cache reduces latency for actively used data, which results in higher performance for the system or application.

It reduces the load on server resources.

It increases reliability.

Disadvantage

Caching is not recommended for a system that performs more write operations than read operations, because every time you perform a write operation you also have to update the cache.

Recommendations

Consistency

Keep the main data source and the cache in sync. Inconsistency usually occurs when the write to the main data store and the write/update to the cache are not performed in a single transaction.
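One way to picture this is a wrapper that treats the two writes as a single unit. The sketch below is an assumption-laden illustration: `SyncedStore` is a hypothetical class, both stores are plain dicts, and the lock only protects callers inside one process. A distributed setup would need a different mechanism.

```python
import threading


class SyncedStore:
    """Hypothetical wrapper that updates a dict 'database' and a dict cache together."""

    def __init__(self):
        self.database = {}  # stands in for the main data store
        self.cache = {}     # stands in for the cache
        self._lock = threading.Lock()  # makes the two writes one unit in this process

    def write(self, key, value):
        with self._lock:
            self.database[key] = value   # 1. write to the source of truth first
            try:
                self.cache[key] = value  # 2. then refresh the cached copy
            except Exception:
                # If the cache update fails, drop the entry so readers fall
                # back to the database instead of seeing a stale value.
                self.cache.pop(key, None)
                raise

    def read(self, key):
        with self._lock:
            if key in self.cache:
                return self.cache[key]
            value = self.database.get(key)
            if value is not None:
                self.cache[key] = value
            return value


store = SyncedStore()
store.write("user:1", "Alice")
store.write("user:1", "Alicia")
print(store.read("user:1"))  # Alicia
```

Because readers and writers share the same lock, a reader never observes the database updated while the cache still holds the old value.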

Eviction Policy

If you keep adding items to the cache, you will eventually run out of memory, so it’s recommended to clear unwanted keys/items according to an eviction policy. Several policies are available for removing keys from the cache. The most widely used is Least Recently Used (LRU), which identifies the item that hasn’t been used for the longest time and removes it. Other eviction policies include Least Frequently Used (LFU) and First In, First Out (FIFO).
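As an illustration of LRU eviction, here is a small sketch built on Python's `collections.OrderedDict`. The `LRUCache` class and its `capacity` parameter are hypothetical names chosen for this example, not part of any particular caching product.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps at most `capacity` items and evicts the least recently used one."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.items = OrderedDict()  # oldest entry first, most recent last

    def get(self, key, default=None):
        if key not in self.items:
            return default
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now more recent than "b"
cache.put("c", 3)  # the cache is full, so "b" is evicted
print(list(cache.items))  # ['a', 'c']
```

Keeping the entries ordered by last access is what makes it cheap to find the eviction candidate: it is always the first entry.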

Single Point of Failure (SPOF)

A single point of failure is a part of the system whose failure hampers the whole system and can stop it entirely. To avoid this, deploy multiple cache instances across different data centers/locations.

Expiration

It’s good practice to set an expiration policy on every item. If you don’t, the cached data remains in memory permanently or until the system restarts. There are different ways to define an expiration policy. One is absolute time: you set an expiry time on each item, and the item expires when that time is reached. Suppose you set a 5-minute absolute expiry for item1. As soon as the 5 minutes are over, the system removes item1 from the cache and calls the eviction callback function. The other option is sliding time: if the item is accessed within the specified interval, its lifetime is extended by the same interval.
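The difference between the two policies can be sketched as follows. `ExpiringCache`, `set_absolute`, `set_sliding`, and `on_evict` are hypothetical names for this example. One simplifying assumption: expired items are removed lazily on the next access rather than by a background timer, and the clock is injectable so the behaviour can be shown without waiting.

```python
import time


class ExpiringCache:
    """Supports absolute and sliding expiration, checked lazily on access."""

    def __init__(self, clock=time.monotonic, on_evict=None):
        self.clock = clock        # function returning the current time in seconds
        self.on_evict = on_evict  # optional callback(key, value) run on expiry
        self.entries = {}         # key -> (value, expires_at, sliding interval or None)

    def set_absolute(self, key, value, ttl):
        self.entries[key] = (value, self.clock() + ttl, None)

    def set_sliding(self, key, value, interval):
        self.entries[key] = (value, self.clock() + interval, interval)

    def get(self, key):
        entry = self.entries.get(key)
        if entry is None:
            return None
        value, expires_at, interval = entry
        now = self.clock()
        if now >= expires_at:
            del self.entries[key]  # expired: remove and notify
            if self.on_evict is not None:
                self.on_evict(key, value)
            return None
        if interval is not None:
            # Sliding expiration: each access pushes the deadline forward.
            self.entries[key] = (value, now + interval, interval)
        return value


fake_now = [0.0]  # simulated clock, in seconds
cache = ExpiringCache(clock=lambda: fake_now[0],
                      on_evict=lambda k, v: print("evicted", k))
cache.set_absolute("item1", "A", ttl=300)      # 5 minutes, fixed
cache.set_sliding("item2", "B", interval=300)  # 5 minutes, renewed on access

fake_now[0] = 200
cache.get("item2")         # accessed in time, so its deadline moves to 500
fake_now[0] = 400
print(cache.get("item1"))  # None: the absolute deadline of 300 has passed
print(cache.get("item2"))  # B: still alive thanks to the sliding window
```

With absolute expiry the deadline is fixed when the item is stored. With sliding expiry an item that keeps being read can stay cached indefinitely.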

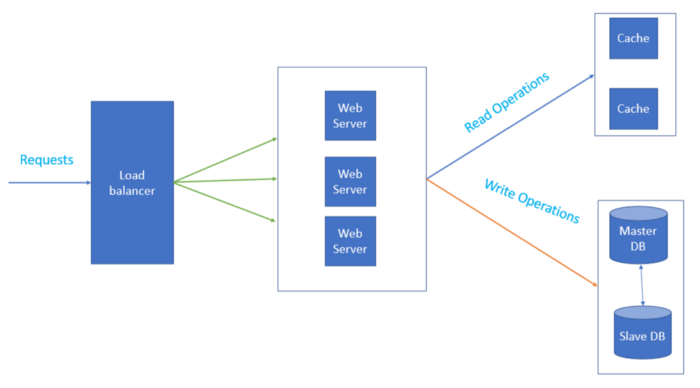

The above diagram is a high-level view. A load balancer distributes the load among different web servers. All write operations are directed to the master DB (SQL Server, NoSQL, etc.), and all read operations are handled by the cache.
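The request flow in the diagram can be approximated in a few lines. This is only a sketch: `WebServer` and `LoadBalancer` are hypothetical classes, the round-robin strategy is an assumption (the diagram does not say how load is distributed), and the master DB and cache are plain dicts shared by all servers.

```python
from itertools import cycle


class WebServer:
    """Sends writes to the master DB and serves reads from the cache."""

    def __init__(self, name, cache, master_db):
        self.name = name
        self.cache = cache
        self.master_db = master_db

    def handle(self, operation, key, value=None):
        if operation == "write":
            self.master_db[key] = value  # writes go to the master DB
            self.cache[key] = value      # keep the cached copy up to date
            return self.name, value
        # Reads are answered by the cache.
        return self.name, self.cache.get(key)


class LoadBalancer:
    """Round-robin dispatcher (an assumed strategy, for illustration)."""

    def __init__(self, servers):
        self._servers = cycle(servers)

    def dispatch(self, operation, key, value=None):
        server = next(self._servers)
        return server.handle(operation, key, value)


shared_cache, master_db = {}, {}
balancer = LoadBalancer([
    WebServer("web1", shared_cache, master_db),
    WebServer("web2", shared_cache, master_db),
])
print(balancer.dispatch("write", "user:1", "Alice"))  # ('web1', 'Alice')
print(balancer.dispatch("read", "user:1"))            # ('web2', 'Alice')
```

Because every web server shares the same cache and master DB, it does not matter which server the load balancer picks for a given request.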