Have you ever wanted weather information for your app without writing all the HttpClient plumbing yourself?

You are in the right place.

I've got an amazing plugin for you!

Let's get started!

Create a new project or open the one you are currently working on.

Today I am going to use a console project.

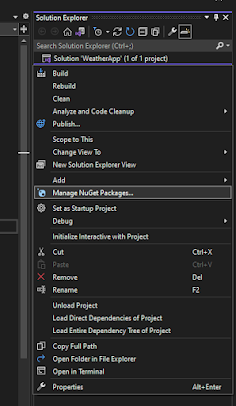

As always, right-click the solution and select Manage NuGet Packages.

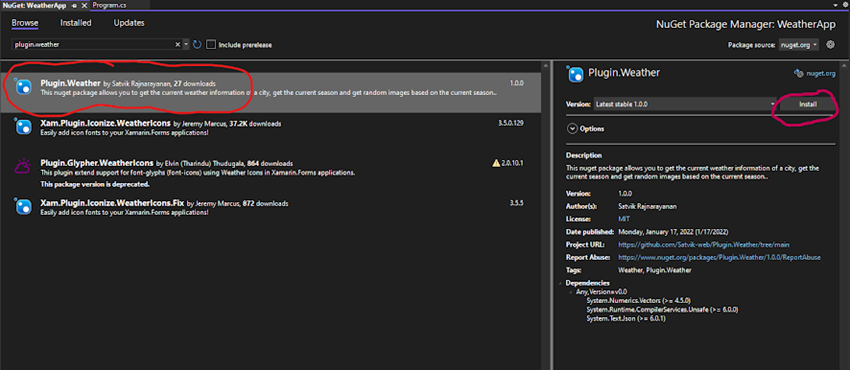

Search for Plugin.Weather and install the package with that name.

Add the following namespace to your file:

```csharp
using Plugin.Weather;
```

Let's first get the current weather for a city.

To do that, use the following code:

```csharp
Weather weather = Api.GetWeatherByCity("City Name");
```

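Now we can access all the weather information through the `weather` object.

The properties used in this article are `Temperature`, `Humidity`, `IsDay`, `Latitude`, `Longitude`, `State`, `Country`, `Weather_Current`, `Cloud_Cover`, `TimeZone`, `Wind_Speed`, `Wind_Degree`, `Wind_Direction`, `Pressure` and `Weather_Icon_url`.

Now I am going to write all this information to the console. You can also get the URL of the weather icon and save the icon in PNG, JPG or GIF format.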

Code

```csharp
using Plugin.Weather;

Weather weather = Api.GetWeatherByCity("City Name");

// Print every field of the current weather on one line
Console.WriteLine($"Temperature: {weather.Temperature}, " +
    $"Humidity: {weather.Humidity}, Looks like Day? {weather.IsDay}," +
    $" Latitude: {weather.Latitude}, Longitude: {weather.Longitude}, " +
    $"State: {weather.State}, Country: {weather.Country}," +
    $" Weather: {weather.Weather_Current}, Cloud Cover {weather.Cloud_Cover}," +
    $" Time Zone: {weather.TimeZone}, Wind Speed {weather.Wind_Speed}," +
    $" Wind Degree {weather.Wind_Degree}, Wind Direction: {weather.Wind_Direction}, " +
    $"Pressure: {weather.Pressure}, Icon Url: {weather.Weather_Icon_url}");

// Save the current weather icon as a JPG to the given location
weather.Save_Weather_Icon_As_Jpg("C:\\weather");

Console.WriteLine("Press Enter to continue...");
Console.ReadLine();
```
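If the single long interpolated string feels hard to maintain, one option is a small formatting helper. The sketch below is hypothetical (`FormatReport` is not part of Plugin.Weather): it takes label/value pairs, writes one per line, and prints "n/a" when a value is null or blank, which is handy for fields like `State` that may come back empty.

```csharp
#nullable enable
using System;
using System.Text;

// Hypothetical helper, not part of Plugin.Weather: writes one "Label: value"
// line per field and substitutes "n/a" for null or blank values.
static string FormatReport(params (string Label, object? Value)[] fields)
{
    var sb = new StringBuilder();
    foreach (var (label, value) in fields)
    {
        string? text = value?.ToString();
        sb.Append(label)
          .Append(": ")
          .AppendLine(string.IsNullOrWhiteSpace(text) ? "n/a" : text);
    }
    return sb.ToString();
}

// Usage with literal values; in your app you would pass weather.Temperature,
// weather.State, weather.Country, and so on.
Console.Write(FormatReport(
    ("Temperature", 17),
    ("State", null),
    ("Country", "New Zealand")));
```

Returning a string instead of writing to the console directly keeps the helper easy to reuse, for example in a log message or a UI label.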

Let's try it with a city, for example Wellington.

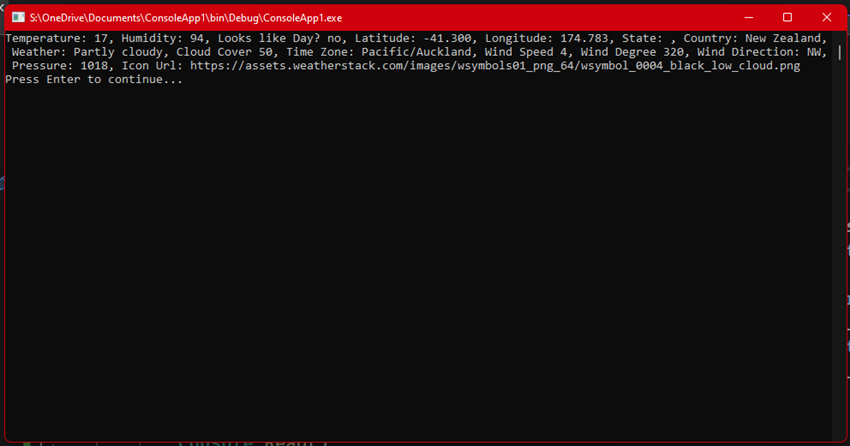

Output

We got the following information for Wellington, New Zealand:

```
Temperature: 17
Humidity: 94
Looks like Day? no
Latitude: -41.300
Longitude: 174.783
State:
Country: New Zealand
Weather: Partly cloudy
Cloud Cover 50
Time Zone: Pacific/Auckland
Wind Speed 4
Wind Degree 320
Wind Direction: NW
Pressure: 1018
Icon Url: https://assets.weatherstack.com/images/wsymbols01_png_64/wsymbol_0004_black_low_cloud.png
```

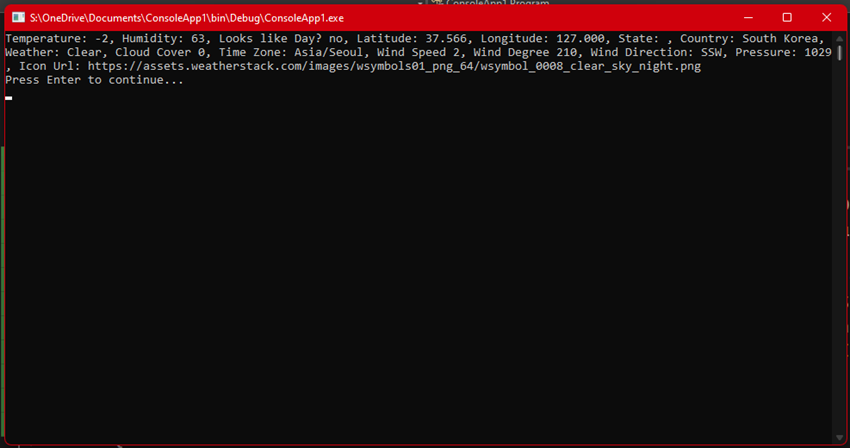

Now let's look at a city in the Northern Hemisphere, for example Seoul.

Output

We got the following information for Seoul, South Korea:

```
Temperature: -2
Humidity: 63
Looks like Day? no
Latitude: 37.566
Longitude: 127.000
State: null
Country: South Korea
Weather: Clear
Cloud Cover 0
Time Zone: Asia/Seoul
Wind Speed 2
Wind Degree 210
Wind Direction: SSW
Pressure: 1029
Icon Url: https://assets.weatherstack.com/images/wsymbols01_png_64/wsymbol_0008_clear_sky_night.png
```

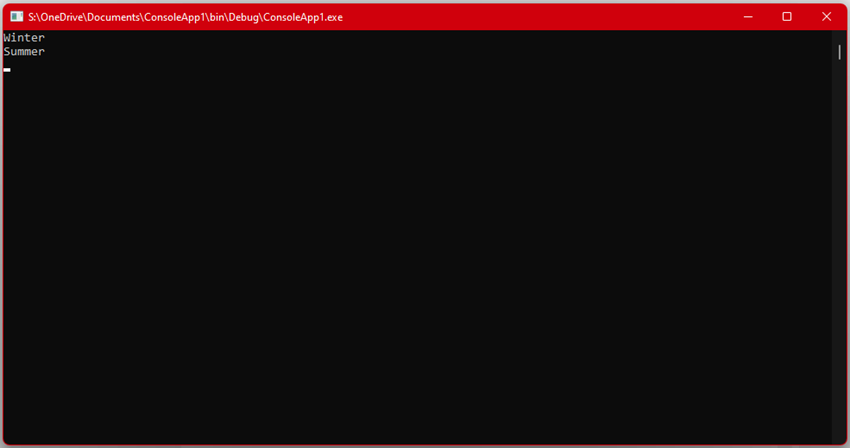
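In both outputs, the Wind Direction matches the Wind Degree on a 16-point compass. The sketch below shows how such a mapping is commonly computed; it is an assumption, not code taken from Plugin.Weather or weatherstack, and `ToCompassPoint` is a hypothetical name. Each compass point covers 22.5°, so dividing the bearing by 22.5 and rounding gives the index. It reproduces both readings above (320° gives NW, 210° gives SSW).

```csharp
using System;

// Hypothetical helper, not part of Plugin.Weather or weatherstack.
// Maps a wind bearing in degrees to one of 16 compass points; each point
// covers 22.5° centered on its heading (N = 0°, NNE = 22.5°, NE = 45°, ...).
static string ToCompassPoint(double degrees)
{
    string[] points =
    {
        "N", "NNE", "NE", "ENE", "E", "ESE", "SE", "SSE",
        "S", "SSW", "SW", "WSW", "W", "WNW", "NW", "NNW",
    };

    // Normalize into [0, 360) so values such as -90 or 400 still work.
    double normalized = ((degrees % 360) + 360) % 360;

    // Round to the nearest point; index 16 wraps back to 0 (N).
    int index = (int)Math.Round(normalized / 22.5, MidpointRounding.AwayFromZero) % 16;
    return points[index];
}

Console.WriteLine(ToCompassPoint(320)); // NW, the Wellington reading
Console.WriteLine(ToCompassPoint(210)); // SSW, the Seoul reading
```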

Now let's take a look at the other functions of this package.

We can also get the current season in the Northern or Southern Hemisphere.

Code

```csharp
Console.WriteLine(Api.Get_Curent_Season_Northern_Hemisphere_As_String());
Console.WriteLine(Api.Get_Curent_Season_Southern_Hemisphere_As_String());
```

Output

At the time of writing, it's winter in the north and summer in the south.
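The article doesn't show how the plugin decides which season it is. As an illustration only, here is a sketch that assumes meteorological seasons (December to February is winter in the north) and flips them for negative latitudes, so it could be combined with the `Latitude` value from the weather object. `NorthernSeason` and `SeasonAt` are hypothetical names; the plugin may well use different boundaries, such as astronomical seasons.

```csharp
using System;

// Hypothetical helpers, not Plugin.Weather's implementation.
// Assumes meteorological seasons for the Northern Hemisphere.
static string NorthernSeason(int month) => month switch
{
    12 or 1 or 2 => "Winter",
    3 or 4 or 5 => "Spring",
    6 or 7 or 8 => "Summer",
    9 or 10 or 11 => "Autumn",
    _ => throw new ArgumentOutOfRangeException(nameof(month)),
};

// South of the equator (negative latitude) the seasons are reversed.
static string SeasonAt(int month, double latitude)
{
    string north = NorthernSeason(month);
    if (latitude >= 0)
    {
        return north;
    }

    return north switch
    {
        "Winter" => "Summer",
        "Summer" => "Winter",
        "Spring" => "Autumn",
        _ => "Spring", // autumn in the north is spring in the south
    };
}

int currentMonth = DateTime.Now.Month;
Console.WriteLine($"Seoul (37.566): {SeasonAt(currentMonth, 37.566)}");
Console.WriteLine($"Wellington (-41.300): {SeasonAt(currentMonth, -41.300)}");
```

In January this gives winter for Seoul and summer for Wellington, which matches the season strings and temperatures reported above.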

We can also get images of the different seasons using the following code.

You have to pass a value from 1 to 5; any value in that range works.

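```csharp
using System.Drawing; // assumption: the returned Image is System.Drawing.Image
using Plugin.Weather;

// Pass a value from 1 to 5 to each method
Image summer = Api.GetSummerImage(2);
Image winter = Api.GetWinterImage(3);
Image autumn = Api.GetAutumnImage(5);
Image spring = Api.GetSpringImage(4);

// The C:\test folder must already exist
summer.Save("C:\\test\\Summer.png");
winter.Save("C:\\test\\Winter.png");
autumn.Save("C:\\test\\autumn.png");
spring.Save("C:\\test\\Spring.png");

Console.WriteLine("Files saved.");
Console.ReadLine();
```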
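Saving to C:\test only works if that folder already exists; assuming `Image` is `System.Drawing.Image`, `Save` typically fails with a generic GDI+ error otherwise. A minimal sketch, assuming you are free to choose the output folder: create it with `Directory.CreateDirectory` and build the file paths with `Path.Combine`. `PrepareSeasonPaths` is a hypothetical helper name.

```csharp
using System;
using System.IO;

// Hypothetical helper: creates the output folder (a no-op if it already
// exists) and returns one .png path per season inside it.
static string[] PrepareSeasonPaths(string folder)
{
    Directory.CreateDirectory(folder);

    string[] seasons = { "Summer", "Winter", "Autumn", "Spring" };
    var paths = new string[seasons.Length];
    for (int i = 0; i < seasons.Length; i++)
    {
        // Path.Combine inserts the right separator for the current OS.
        paths[i] = Path.Combine(folder, seasons[i] + ".png");
    }
    return paths;
}

// Example folder under the temp directory; on Windows you could use @"C:\test".
string outputFolder = Path.Combine(Path.GetTempPath(), "season-images");
foreach (string path in PrepareSeasonPaths(outputFolder))
{
    // Pass each path to the matching image's Save method.
    Console.WriteLine(path);
}
```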

Let's check the folder.

Four files have been created, and they show pictures of the different seasons.

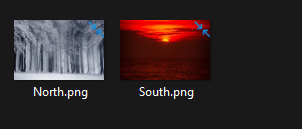

You can also get an image based on the current season in the Northern or Southern Hemisphere.

Again, you need to pass a number from 1 to 5 to get an image. Use this code:

```csharp
Image image_north = Api.Get_Curent_Season_Image_Northern_Hemisphere(4);
Image image_south = Api.Get_Curent_Season_Image_Southern_Hemisphere(2);

image_north.Save("C:\\North.png");
image_south.Save("C:\\South.png");
```

Output

Two files have been created.

At the time of writing, it is winter in the north and summer in the south.

With just two lines of code, we get an image based on the current season and the value we pass.
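If you want the value itself to be random, note that `Random.Next(minValue, maxValue)` excludes `maxValue`, so `Next(1, 6)` returns 1 through 5. The sketch below uses a hypothetical `PickImageNumber` helper; its result would be passed to the season image methods shown above.

```csharp
using System;

// Hypothetical helper: picks an image number in the range the article
// describes (1 to 5). Random.Next's upper bound is exclusive, so
// Next(1, 6) can return 1, 2, 3, 4 or 5 but never 6.
static int PickImageNumber(Random random) => random.Next(1, 6);

var rng = new Random();
int imageNumber = PickImageNumber(rng);
Console.WriteLine($"Using image number {imageNumber}");

// With Plugin.Weather referenced, the value would be passed on as in the
// article, e.g. Api.Get_Curent_Season_Image_Northern_Hemisphere(imageNumber).
```

Taking the `Random` instance as a parameter lets you pass a seeded one when you want repeatable picks, for example while testing.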

Easy, right?

In this article, we have learned how to use C# to get weather information, the current season, images of each season, and an image for the current season.

This works in .NET projects such as ASP.NET, Xamarin.Forms, WinForms, WPF and .NET MAUI.

You can download the NuGet package used in this article here:

Link: https://www.nuget.org/packages/Plugin.Weather/

(OR)

Run the following command in the Package Manager Console:

```
PM> Install-Package Plugin.Weather -Version 2.0.0
```
