IdentityServer4 is an open-source OpenID Connect and OAuth 2.0 framework for ASP.NET Core. It acts as a centralized authentication provider, or security token service (STS). It is a good fit when you have multiple APIs or microservices: a single security token service handles authentication, so you don’t have to define authentication in each and every application.

IdentityServer4 is a simple and straightforward STS. Users access data through clients (an ASP.NET MVC, Angular, or React application, and so on), and IdentityServer4 authenticates those users before they can use the client. After successful authentication, IdentityServer4 sends a token to the client, and the client then uses this token to access data from the APIs.
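The token round trip described above can be sketched in C#. This is only an illustration under stated assumptions: it uses the OAuth 2.0 client credentials grant for brevity (an interactive user login would use a different flow), and the client id, secret, scope, authority and API URL are hypothetical placeholders rather than values from the demo project. `/connect/token` is the standard IdentityServer4 token endpoint.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text.Json;
using System.Threading.Tasks;

public static class TokenFlowSketch
{
    // Builds the form fields of an OAuth 2.0 client credentials token request.
    public static Dictionary<string, string> BuildTokenRequest(
        string clientId, string clientSecret, string scope)
    {
        return new Dictionary<string, string>
        {
            ["grant_type"] = "client_credentials",
            ["client_id"] = clientId,
            ["client_secret"] = clientSecret,
            ["scope"] = scope
        };
    }

    // Reads the "access_token" field from the token endpoint's JSON response.
    public static string ReadAccessToken(string json)
    {
        using var doc = JsonDocument.Parse(json);
        return doc.RootElement.GetProperty("access_token").GetString();
    }

    // Requests a token from the STS, then calls the API with it as a bearer token.
    // "demo-client", "demo-secret" and "api1" are hypothetical placeholder values.
    public static async Task<string> CallApiAsync(HttpClient http, string authority, string apiUrl)
    {
        var form = new FormUrlEncodedContent(BuildTokenRequest("demo-client", "demo-secret", "api1"));
        var tokenResponse = await http.PostAsync($"{authority}/connect/token", form);
        tokenResponse.EnsureSuccessStatusCode();
        var token = ReadAccessToken(await tokenResponse.Content.ReadAsStringAsync());

        using var request = new HttpRequestMessage(HttpMethod.Get, apiUrl);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
        var apiResponse = await http.SendAsync(request);
        apiResponse.EnsureSuccessStatusCode();
        return await apiResponse.Content.ReadAsStringAsync();
    }
}
```

A call such as `await TokenFlowSketch.CallApiAsync(new HttpClient(), "http://localhost:5000", apiUrl)` would run both steps, assuming an IdentityServer4 instance is listening on that address and a matching client is registered.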

IdentityServer4 provides the flexibility to use external storage such as a relational database, a NoSQL database, or an in-memory data store. NCache, being a distributed and scalable in-memory key-value data store, is a great fit for IdentityServer4. In this article, you will learn how to use NCache as external in-memory storage for IdentityServer4. The main advantage of using external in-memory storage is improved application performance.

Advantages of using IdentityServer4

Secure your resources

Authenticate users either using a local account store or an external provider

Validate tokens

What is NCache?

NCache is an Open Source in-memory distributed cache for .NET, Java, and Node.js. NCache is extremely fast and linearly scalable and caches application data to reduce expensive database trips. Use NCache to remove performance bottlenecks related to your data storage and databases and scale your .NET, Java, and Node.js applications to extreme transaction processing (XTP).

Using NCache with IdentityServer4

NCache can be used with IdentityServer in the following ways.

NCache as IdentityServer4 Store

NCache as IdentityServer4 Cache

1. NCache as an In-Memory IdentityServer4 Store

As an IdentityServer4 store, NCache holds the configuration and operational data in memory, which speeds up operations and reduces frequent database hits.

The store keeps information about the clients, identity resources, APIs, and so on. You can use NCache for one of the two stores or for both of them.

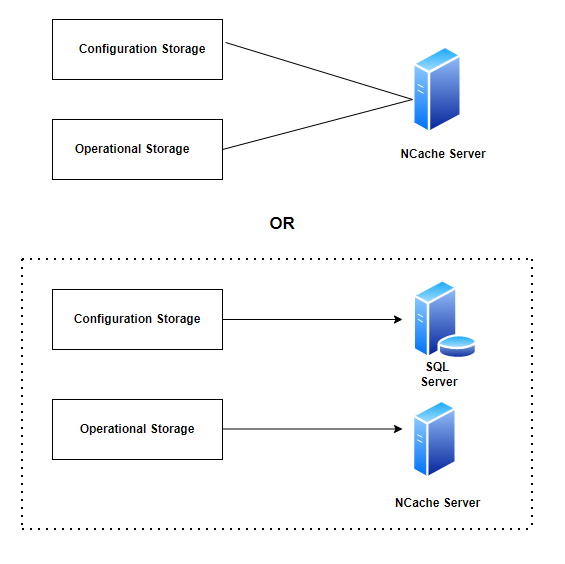

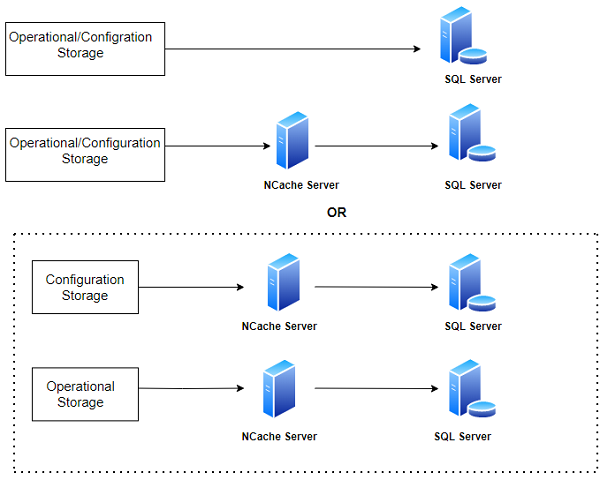

The figure below gives a complete picture of NCache as an in-memory IdentityServer4 store.

Option 1

NCache store acts as both a configuration and operational store

Option 2

The configuration store is SQL Server or some other storage, and the operational store is NCache.

Note: The cache and store roles of NCache with IdentityServer4 can also be mixed and matched using the NCache extension methods on IIdentityServerBuilder for ASP.NET Core.

What is a configuration store?

A store for static data that doesn’t change frequently, such as clients, identity resources, and API resources.

What is an operational store?

A store for operational data that is created and changed frequently at runtime, such as persisted grants and device codes.

Configure NCache as IdentityServer4 Store

For this demo, I have used my existing ASP.NET Core IdentityServer4 application, which you can download from GitHub.

Download and install the “Alachisoft.NCache.IdentityServer4” package from NuGet Package Manager.

Create a new startup file “StartupNCache.cs” and add the following code.

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddControllersWithViews();

        var builder = services.AddIdentityServer()
            .AddTestUsers(TestUsers.Users)
            // Add NCache as the configuration store
            .AddNCacheConfigurationStore(options =>
            {
                options.CacheId = _configuration["NCacheConfiguration:CacheId"];

                // Comma-separated server addresses, each reached on port 9800
                var serverList = _configuration["NCacheConfiguration:Servers"]
                    .Split(',')
                    .Select(x => new NCacheServerInfo(x.Trim(), 9800))
                    .ToList();

                options.ConnectionOptions = new NCacheConnectionOptions
                {
                    ServerList = serverList,
                    EnableClientLogs = true,
                    LogLevel = NCacheLogLevel.Debug
                };
            })
            // Add NCache as the operational store (persisted grants)
            .AddNCachePersistedGrantStore(options =>
            {
                options.CacheId = _configuration["NCacheConfiguration:CacheId"];

                var serverList = _configuration["NCacheConfiguration:Servers"]
                    .Split(',')
                    .Select(x => new NCacheServerInfo(x.Trim(), 9800))
                    .ToList();

                options.ConnectionOptions = new NCacheConnectionOptions
                {
                    ServerList = serverList,
                    EnableClientLogs = true,
                    LogLevel = NCacheLogLevel.Debug
                };
            })
            // Add NCache as the operational store (device codes)
            .AddNCacheDeviceCodeStore(options =>
            {
                options.CacheId = _configuration["NCacheConfiguration:CacheId"];

                var serverList = _configuration["NCacheConfiguration:Servers"]
                    .Split(',')
                    .Select(x => new NCacheServerInfo(x.Trim(), 9800))
                    .ToList();

                options.ConnectionOptions = new NCacheConnectionOptions
                {
                    ServerList = serverList,
                    EnableClientLogs = true,
                    LogLevel = NCacheLogLevel.Debug
                };
            });
    }

In the appsettings.json file, set the value of the CacheId key to the name of the clustered cache you are using. For the Servers key, use a comma-separated list of one or more IP addresses belonging to the NCache servers.

    "NCacheConfiguration": {
      "CacheId": "demoCache",
      "Servers": "[your server address]"
    },

Replace [your server address] with the address of the server used for your clustered cache; in my case it is 192.168.1.2.
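To make the comma-separated Servers format concrete, here is a minimal, self-contained sketch of the parsing step that the startup code performs inline. The `ServerListSketch` class and its tuple result are hypothetical stand-ins for illustration; the real code builds `NCacheServerInfo` objects instead. The port 9800 is the one used in the startup code above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ServerListSketch
{
    // Port used for every server in the article's startup code.
    public const int DefaultPort = 9800;

    // Splits a comma-separated "Servers" value into (host, port) pairs,
    // trimming whitespace and skipping empty entries.
    public static List<(string Host, int Port)> Parse(string servers, int port = DefaultPort)
    {
        if (string.IsNullOrWhiteSpace(servers))
            throw new ArgumentException("At least one NCache server address is required.", nameof(servers));

        return servers
            .Split(',')
            .Select(s => s.Trim())
            .Where(s => s.Length > 0)
            .Select(s => (Host: s, Port: port))
            .ToList();
    }
}
```

For example, `ServerListSketch.Parse("192.168.1.2, 192.168.1.3")` yields two entries, one per address, both on port 9800. Failing fast on an empty value is a design choice of this sketch, so that a missing Servers key surfaces at startup rather than on the first cache call.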

Register the new startup class in the Program.cs file:

    webBuilder.UseStartup<StartupNCache>();

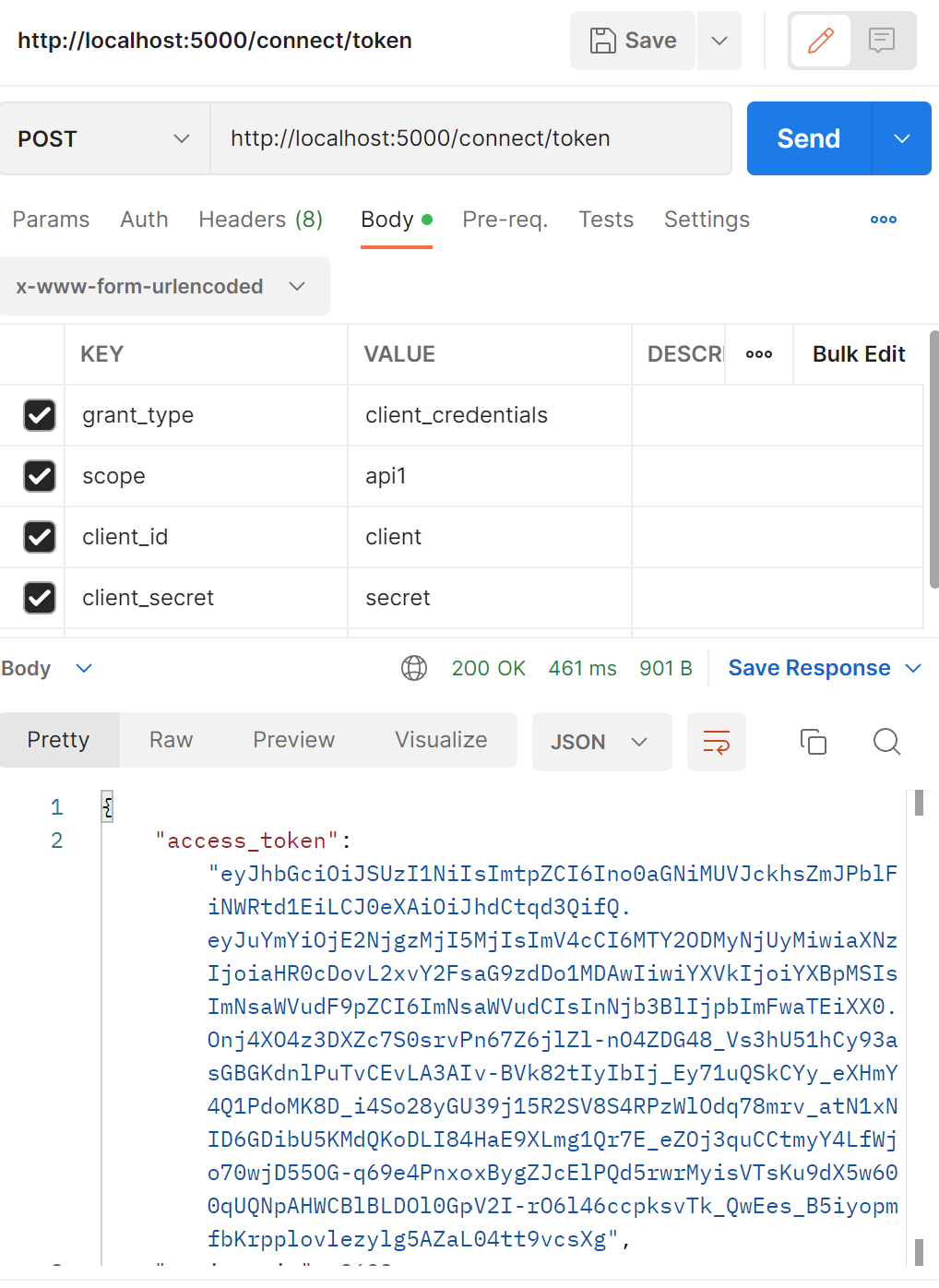

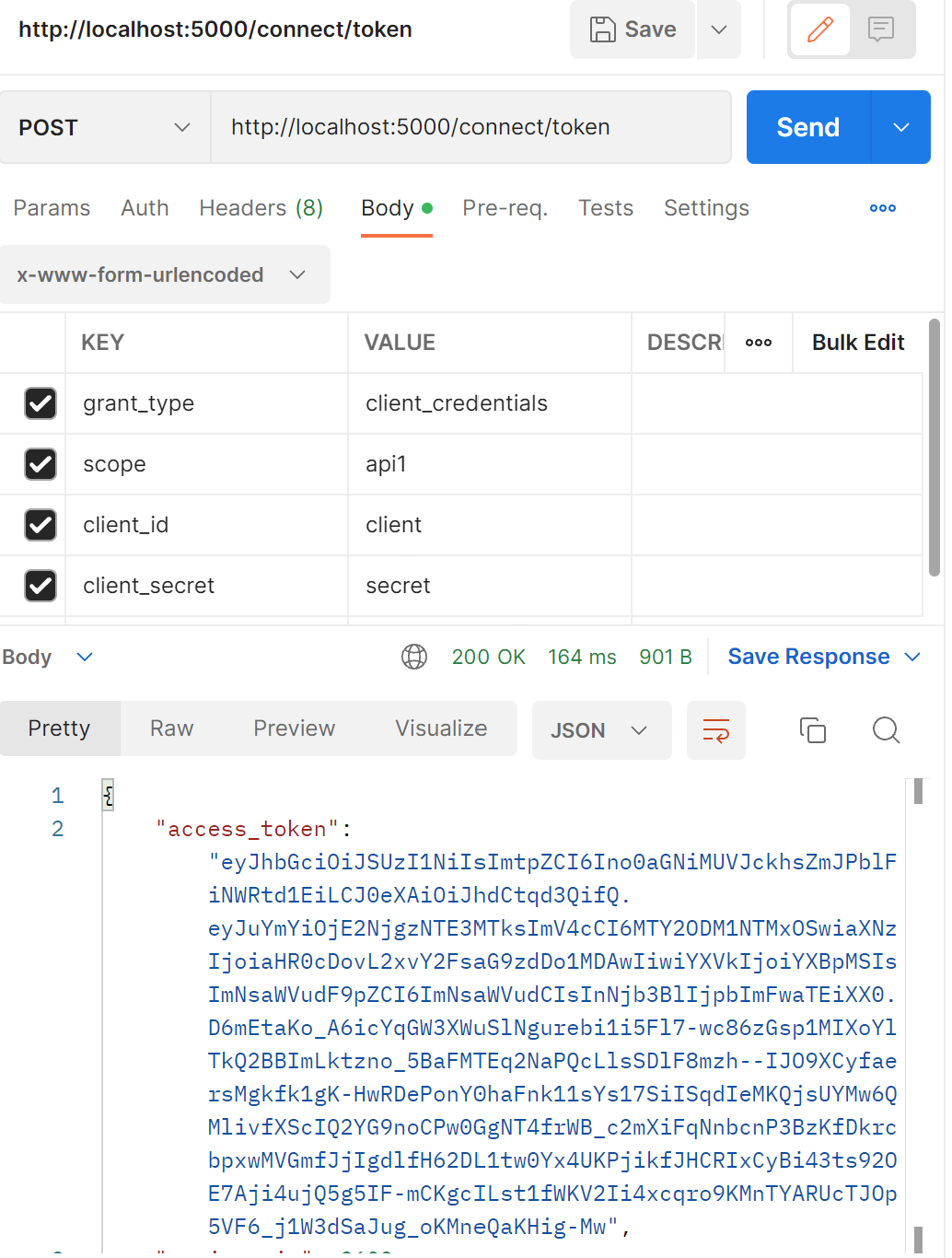

Run the application and test token generation from Postman.

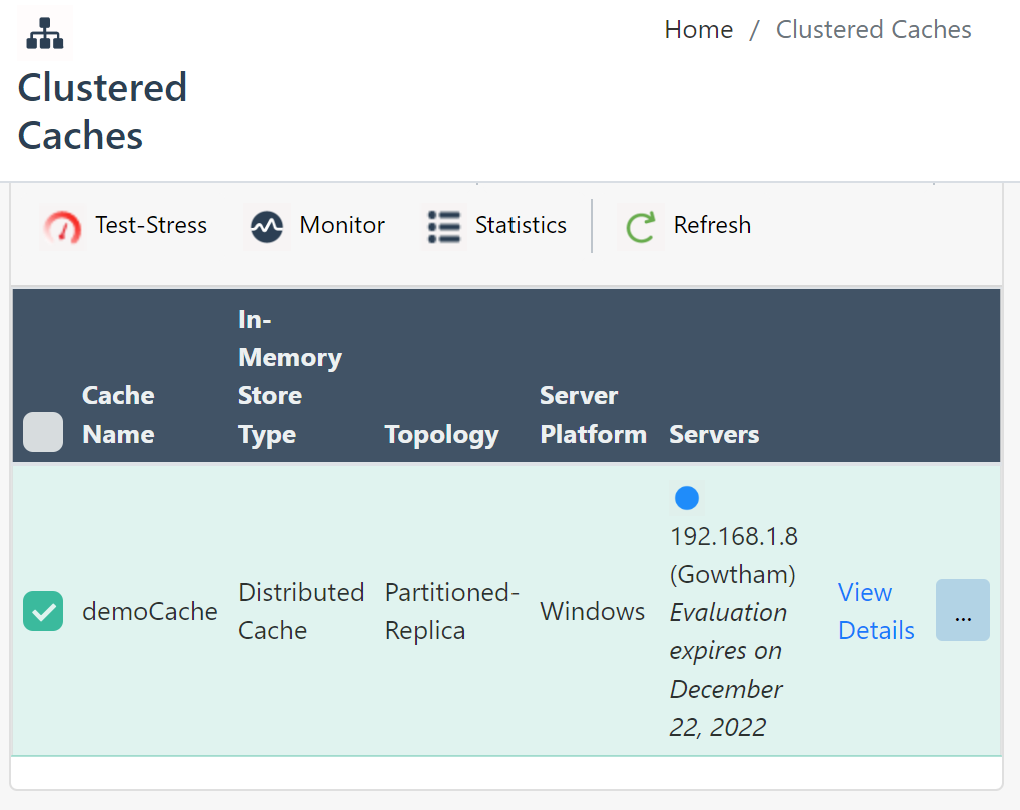

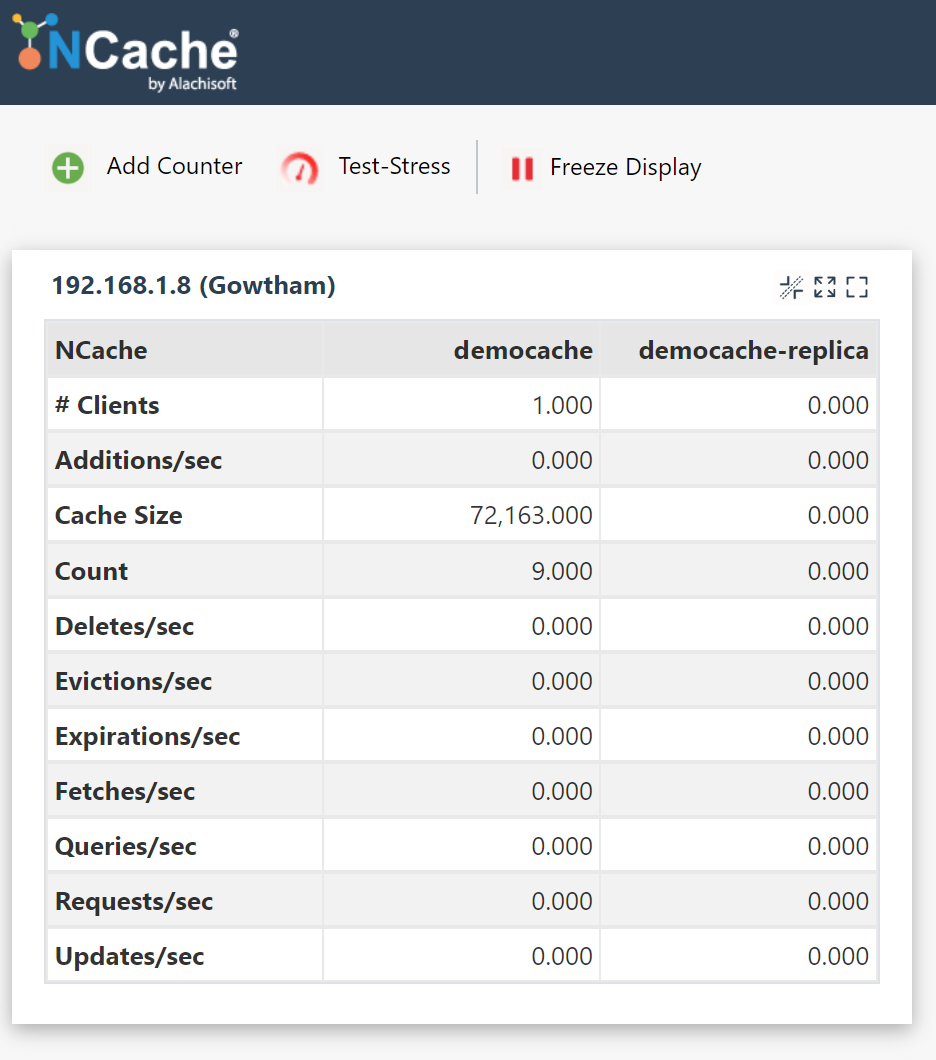

From the above figure you can observe, the ASP.NET Core application with NCache as IdentityServer4 running under port 5000 and the token has been successfully generated from Postman tool. Now open NCache web manager to check the statistics.

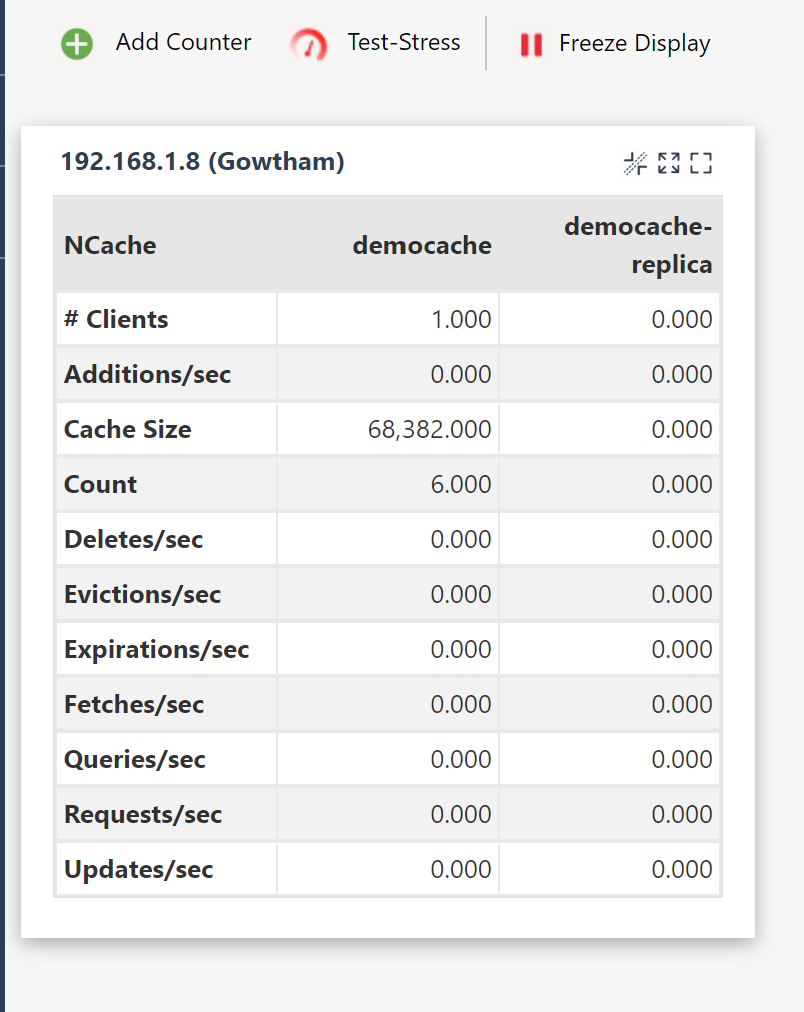

From the above statistics, the clients parameter gives you the no of connection established count, In my case, I have connected only one application to the NCache clustered cache, so the count is 1.

The count parameter gives you the number of key value pair cached.

2. NCache as an In-Memory IdentityServer4 Cache Implementation

NCache can be used as a configuration and persisted grant store, which increases performance by removing the bottleneck of reading data from disk. The in-memory content of the cache can then be periodically persisted to disk, which reduces the average time taken to get the data.

NCache’s core functionality is to cache data persisting in your data source for faster access and better performance. While you can use NCache as your data store, you can also use it between your data store and the application by caching the configuration and/or the operational data in NCache.

The figure below gives a complete picture of NCache as an in-memory IdentityServer4 cache.

Install the following NuGet package in your application:

"Alachisoft.NCache.IdentityServer4"

Download and install the “IdentityServer4.NCache.Options” package from NuGet Package Manager.

Create a new file StartupEFCore and add the following code:

    public void ConfigureServices(IServiceCollection services)
    {
        ...

        var builder = services.AddIdentityServer()
            .AddTestUsers(TestUsers.Users)
            // Add NCache as the caching layer
            .AddNCacheCaching(options =>
            {
                options.CacheId = _configuration["NCacheConfiguration:CacheId"];

                // Comma-separated server addresses, each reached on port 9800
                var serverList = _configuration["NCacheConfiguration:Servers"]
                    .Split(',')
                    .Select(x => new NCacheServerInfo(x.Trim(), 9800))
                    .ToList();

                options.ConnectionOptions = new NCacheConnectionOptions
                {
                    ServerList = serverList
                };
                options.DurationOfBreakInSeconds = 120;
            })
            // Cache the configuration stores and the persisted grant (operational) store
            .AddClientStoreCache<ClientStore>()
            .AddResourceStoreCache<ResourceStore>()
            .AddNCacheCorsPolicyCache<CorsPolicyService>()
            .AddNCachePersistedGrantStoreCache<PersistedGrantStore>(options =>
            {
                options.CacheId = _configuration["NCacheConfiguration:CacheId"];

                var serverList = _configuration["NCacheConfiguration:Servers"]
                    .Split(',')
                    .Select(x => new NCacheServerInfo(x.Trim(), 9800))
                    .ToList();

                options.ConnectionOptions = new NCacheConnectionOptions
                {
                    ServerList = serverList
                };
                options.DurationOfBreakInSeconds = 120;
            })
            // NCache as the default IProfileService cache implementation
            .AddNCacheProfileServiceCache<TestUserProfileService>(options =>
            {
                options.Expiration = TimeSpan.FromMinutes(10);
                options.KeyPrefix = "NCache-";
                options.KeySelector = (context) => context.Subject.Claims.First(c => c.Type == "sub").Value;
                options.ShouldCache = (context) => true;
            });
    }

In the appsettings.json file, set the value of the CacheId key to the name of the clustered cache you are using. For the Servers key, use a comma-separated list of one or more IP addresses belonging to the NCache servers.

    {
      "NCacheConfiguration": {
        "CacheId": "demoCache",
        "Servers": "192.168.1.8"
      },
      "ConnectionStrings": {
        "db": "server=;database=IdentityServerNCacheLocal;UserId=userid;Password=password;"
      }
    }

Run the application. The client information is stored in the database. Since NCache caches both the configuration and the operational data, that data is served from the NCache clustered cache, so we can skip the database transaction and improve application performance.

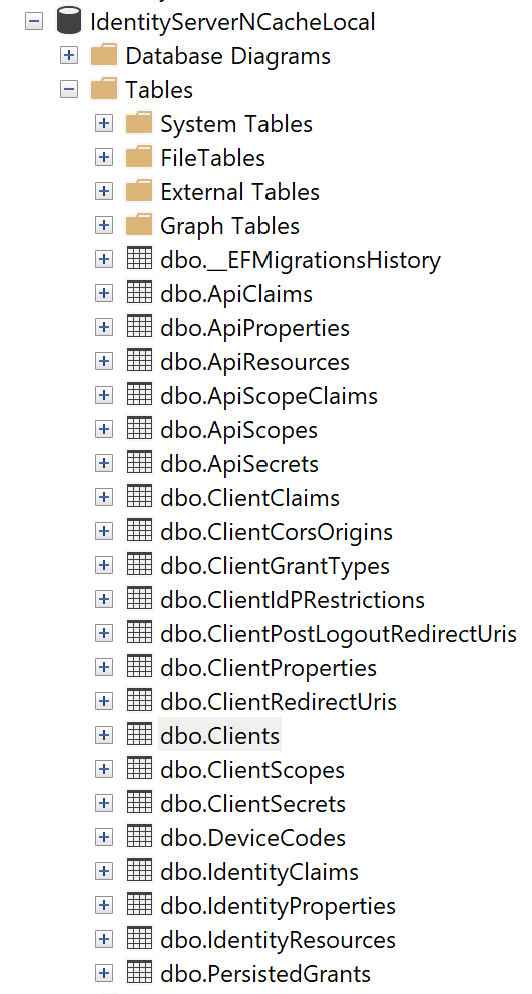

Database tables

Get the token using Postman

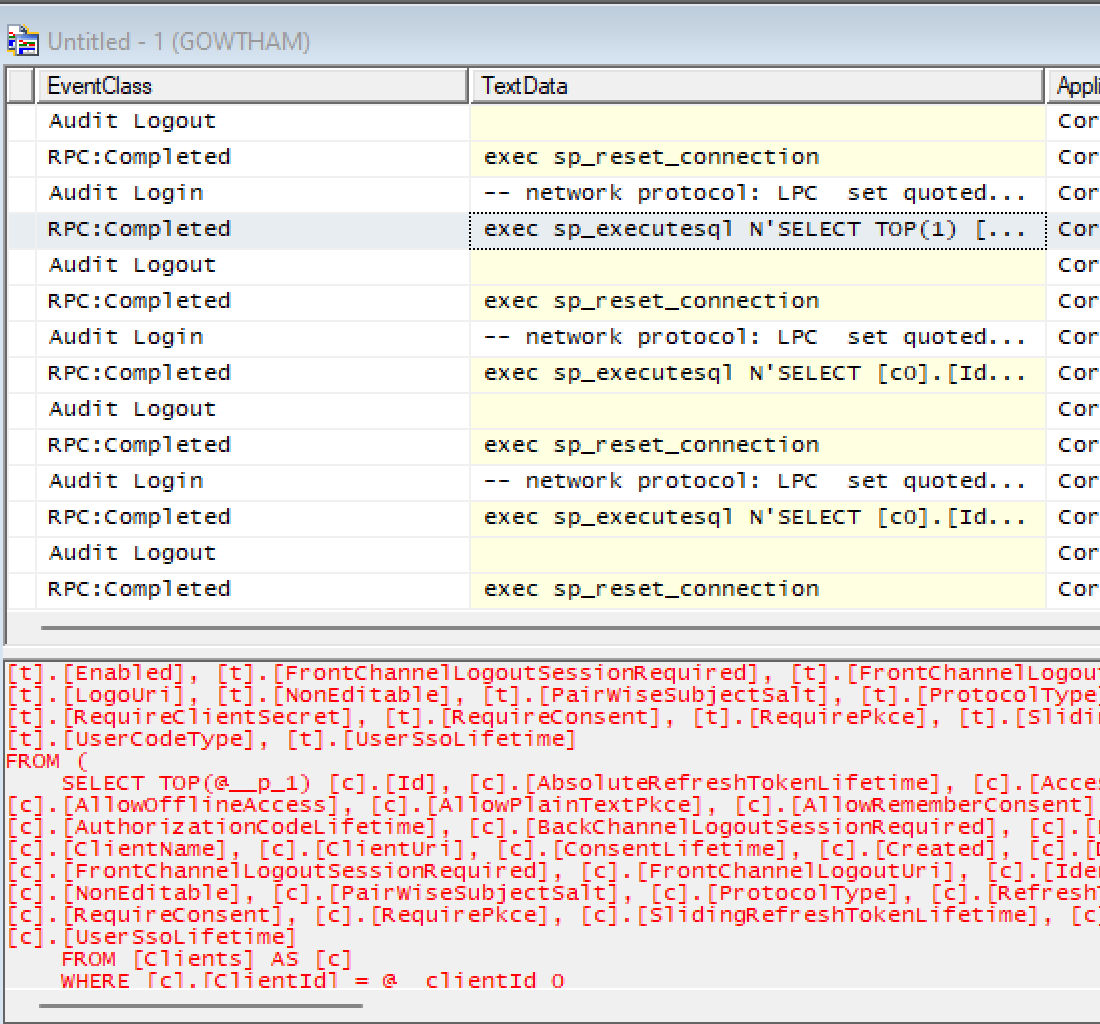

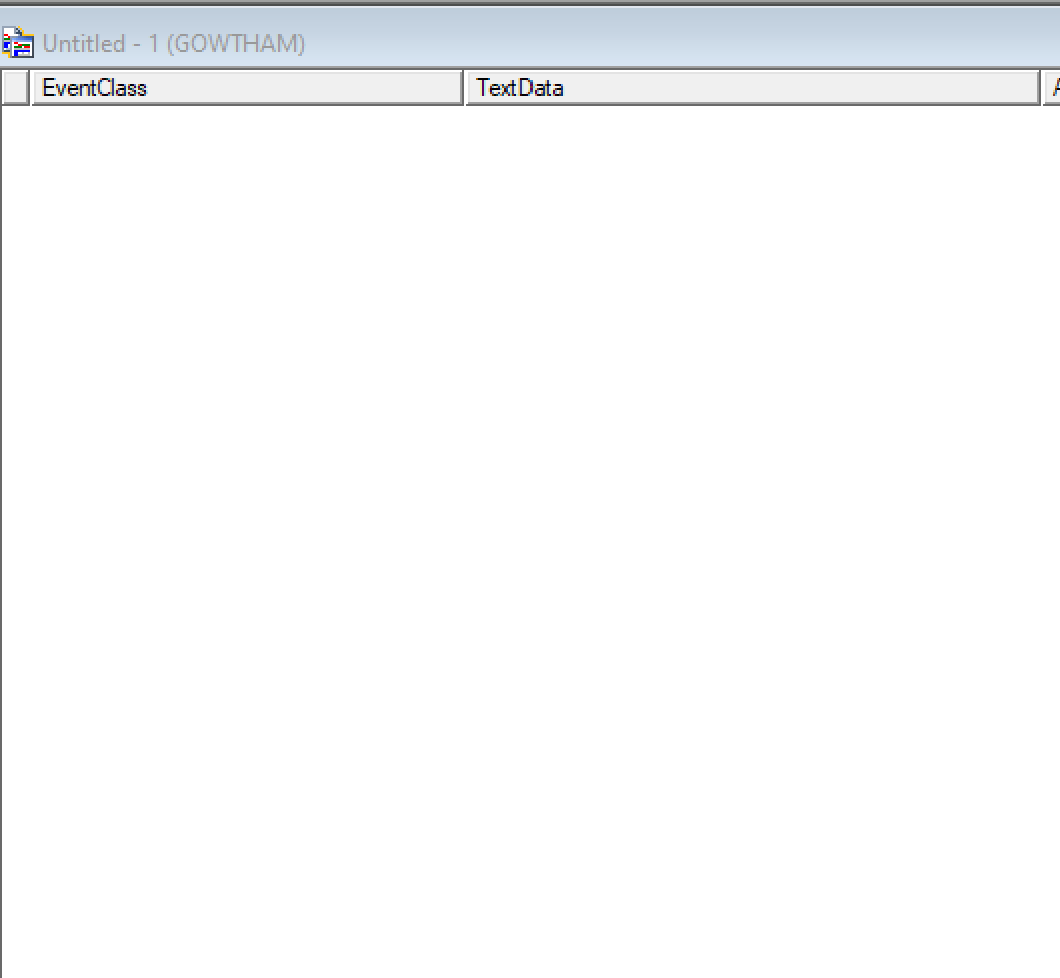

The trace of the database transactions while getting a token using Postman is given below.

The first time, the application hits the database to generate a new token.

Check the cache count in NCache Web Manager.

Previously the count was 6; now it is 9 and the cache size has also increased, which means the token has been cached in the NCache clustered cache. The next time you request a token, there won't be any database transaction.

Get the token from Postman once again; this time the token and other details come from the NCache server.

The profiler records no transaction, which means no database transaction happened when you requested the token for the second time. This approach improves application performance by reducing database trips.
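The behavior observed above (a database hit on the first request, none on the second) is the cache-aside pattern. The following is a minimal in-process sketch of that pattern, not NCache's actual implementation: `CacheAsideSketch` is a hypothetical class, a plain `Dictionary` stands in for the clustered cache, and the loader delegate stands in for the database query.

```csharp
using System;
using System.Collections.Generic;

public sealed class CacheAsideSketch<TKey, TValue>
{
    // Stand-in for the distributed cache; not thread-safe, for illustration only.
    private readonly Dictionary<TKey, TValue> _cache = new Dictionary<TKey, TValue>();

    // Stand-in for the database query that runs on a cache miss.
    private readonly Func<TKey, TValue> _loadFromDatabase;

    // Counts how many times the loader (the "database") was actually called.
    public int DatabaseTrips { get; private set; }

    public CacheAsideSketch(Func<TKey, TValue> loadFromDatabase)
    {
        _loadFromDatabase = loadFromDatabase ?? throw new ArgumentNullException(nameof(loadFromDatabase));
    }

    // Returns the cached value if present; otherwise loads it once and caches it.
    public TValue Get(TKey key)
    {
        if (_cache.TryGetValue(key, out var cached))
            return cached;

        DatabaseTrips++;
        var value = _loadFromDatabase(key);
        _cache[key] = value;
        return value;
    }
}
```

Calling `Get` twice with the same key invokes the loader only once, so `DatabaseTrips` stays at 1 after the second call, which mirrors the empty profiler trace on the second token request.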

Implementing OpenID Connect with IdentityServer4 secures your application by authenticating the user. On top of that, integrating NCache as external storage improves your application performance and provides additional features by acting as both an IdentityServer4 cache and store.
